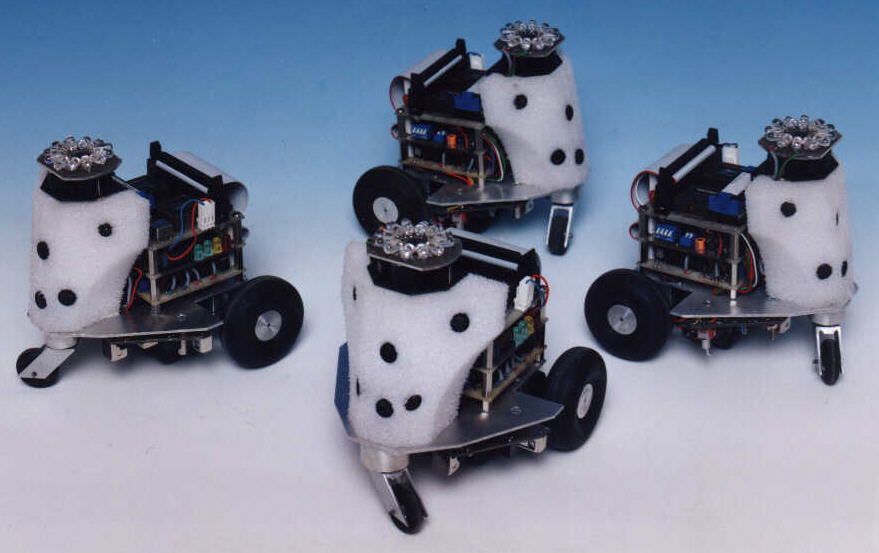

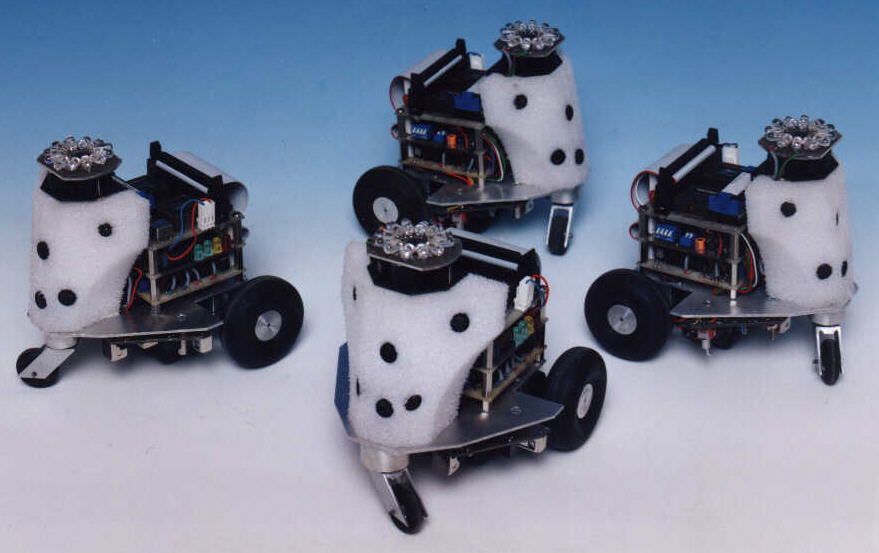

Four of the communicating Dwarf robots

The communicating Dwarfs

(Note: The work described on this page was carried out at the

University of Reading in the Department of

Cybernetics)

Four of the communicating Dwarf robots

The Robots

A group of five autonomous mobile robots were constructed which are equipped

with an infrared communication and localisation system as well as ultrasonic

sonar for detecting obstacles. The obstacle detection system consists of three

sets of ultrasonic sonar transducers; one set looking forward, one set looking

to the front-left and the other set looking to the front-right. Provision for a

fourth set of transducers is included, but these were never required. Ultrasonic

sonar systems operate by transmitting a short pulse of ultrasonic sound, and

timing how long it takes to bounce off an object back to the receiver. The time

taken for the signal to return gives a measure of the range to that object,

since the speed of sound is approximately constant in air. This sonar system

returns only the range to the nearest obstacle from each set of transducers. To

allow the robots to detect close obstacles, echoes have to be detected whilst

the ultrasonic pulse is being transmitted, thus requiring a high threshold. For

the detection of objects further away, a much lower threshold is required to

allow for the large signal loss (as stated by the 1/(range)4 law).

The detection of both near and distant obstacles is accomplished by using a

varying threshold system. This threshold is initially large, but decreases with

time to a pre-set minimum. A time-out system is used to determine if no objects

are within range. Each set of sonar transducers is scanned ten times per second,

and has a range of 30mm to 1m with a resolution of about 3mm.

An active infrared system is used for inter-robot communications. This is

frequency division multiplexed, with each robot having its own channel. The

carriers of these channels range from 220kHz to 400kHz, and the transmission

medium is 950nm infrared light. In order to obtain more transmission power, and

hence range, from inexpensive Light Emitting Diodes (LEDs) at these frequencies,

a ring of twelve 60 degree LEDs are used. Each robot also has four photodiodes

arranged 90 degrees apart (each with a half power angle of 120 degrees). This

combination of LEDs and photodiodes allows transmissions to be received

regardless of the angles of rotation of the robots. Information is transmitted

by frequency modulating the carriers, with the decoding being carried out using

an off-the-shelf radio frequency (RF) integrated circuit. Data is transceived at

1200 baud (120 bytes per second) using the RS232 standard. The range of this

system is over 6 metres for localisation, and over 12 meters for data

communication, with each of the LEDs being driven by 50mA. The transmitter,

therefore, requires a maximum current of about 200mA, at 5V whilst the receiver

requires less than 20mA, again at 5V. The communications system can scan ten

channels through each of its four photo-sensors at a rate of ten times each

second.

Physically the robots are small, having a width of 140mm, a length of 130mm and

a height of 140mm. Each robot weighs less than 600 grams. Movement is provided

by two small d.c. motors with in-line gearboxes each driving one of the rear

wheels. The front of each robot is supported by a single castoring wheel. At

present, motor control is provided by open-loop pulse width modulated

controllers providing several different speeds (up to just over 1ms-1)

and directional control. Each robot is equipped with a single 8MHz Z80 CPU. The

actions of the robot are controlled by the Z80 which is also used for sensor

reading under interrupt. As a mater of fact, for both the flocking and group

learning experiments no more than 50% of this processor power is required.

Rechargeable NiMH 4.8V@1.8Ah batteries provide over five hours of life per

charge.

Flocking

Flocking is a behaviour that is commonly found in nature. Animals sometimes

flock for protection from wind or low temperatures but mainly for the protection

that a flock offers from predators. When a predator attempts to attack a flock

all the animals within the flock will flee for their lives, thus confusing the

predator who now has to try to target one of many animals. Therefore the chance

of any individual being caught and killed by a predator decreases with

increasing flock size. In order to maintain a flock animals have to remain in

close proximity to each other whilst changing direction and speed. The members

of the flock also have to avoid collisions with each other and obstacles within

their environment. Flocking behaviour can be found in birds, land animals (i.e.

cows where the flock is known as a herd or sheep), and fish (where it is known

as a school). Flocking presents an interesting problem in the field of robotics

since it requires two opposing goals, the robots have to get close to each other

but also have to avoid collisions. With many robots in close proximity, their

sensors will receive more noise than when the robots are not so close together,

for example false sonar echoes can be received from other robots, thus giving

range errors. For these reasons flocking and the required sensor fusion formed a

non-trivial task with which to test the robots.

Real time flocking was achieved reactively using a subsumption like

architecture, but with a simple dynamic form of leadership. The four levels of

control in this architecture are:

Avoiding objects and wandering is achieved using

information from the ultrasonic sonar, with the position and distance to other

robots being determined from the infrared communication and localisation system.

The localisation system is used to form an attractive force which brings the

robots together, whilst the sonar is used to act as a repulsive force to prevent

collisions. To help prevent head-on collisions only the rearward LEDs are

switched on, thus the robots are only attracted towards the rear of each other.

When a very close object is detected the avoidance behaviour forces the robots

to slowly reverse, thus helping to avoid deadlocks and maintain the minimum

separation distance between robots. The communication system is used by each

robot to inform the other robots whether it is a leader or a follower.

The selection of the leader has to be dynamic, because like Reynolds's boids

(and real flocks of animals) the flock should be able to split up to go around

obstacles, and rejoin once past the obstacle. If a leader is pre-defined this is

not possible. Also, since our robots operate in a finite bounded environment

there would be problems when the flock meets a boundary of the environment. In

this case the pre-defined leader would have to fight its way through the other

robots. Thirdly, if the pre-defined leader should stop working (i.e. die or is

killed) then the whole of the flock would also fail. Clearly such a pre-defined

leader should not be utilised.

Under a system where any robot can become a leader and can relinquish leadership

when required, one or more leaders can coexist. In this system the flock can

split up into two smaller flocks to go around both sides of an obstacle and then

rejoin once past the obstacle. If the leader should get trapped between other

robots, then by definition it is now in the flock and therefore simply gives up

leadership. One of the robots on the outside of the flock will take over the

leadership and the rest will follow it. To ensure that this new leader does not

simply turn around and rejoin the main body of the flock there is a short period

of time for which it is not allowed to relinquish leadership to any robots that

are followers. However, even during this period a new leader will still

relinquish leadership to another leader in front of it.

Initially, if a leader could be seen the following robots would follow it and

ignore any other robots in the flock, however the other robots would still be

seen as obstacles to avoid collisions. This strategy produced a very poor

flocking behaviour. In an open space the robots would follow each other in a

straight line instead of flocking and would still tend to clump for short

periods of time. By assigning a higher priority to following the leader, than

the other following robots, the clumping problem is eliminated and with five or

more robots true flocking patterns will emerge in open space. In order that this

priority system is flexible to varying numbers of robots, the weighting given to

following the leader is equal to the number of other visible robots, in front of

any given robot.

From the point of view of any single robot, when no other robots are visible in

front, it can become a leader. As leaders, robots wander around by moving

forward in a straight line until an object is encountered, upon which they turn

away from it. As stated above, to inhibit a leader from rejoining the flock

immediately after leaving it, leaders do not start looking for other robots

until after a few seconds of taking leadership. After this delay, when one or

more other robots are visible ahead, leadership is relinquished. Robots that are

followers head towards the greatest density of visible robots, with a higher

priority being assigned to following the leader. This attracts robots towards

each other, thus forming flocks. If as a follower, any robot sees a flock in the

distance, it will catch up with them by increasing its speed. Whilst any robot

is in a flock it tries to match the speed and direction of its nearest

neighbours.

A flock forming sequence at one frame every two seconds

A typical flocking sequence at one frame every two seconds

Click here for an mpeg

movie of the robots flocking in real time (2MB)

Shared experience learning

The ability to learn is an essential component of intelligent behaviour in human

society. Individual humans do not need to learn everything by discovering it

from scratch for themselves. Instead, they learn from their peers and teachers

by exchanging the knowledge and information that they have acquired. Learning

from peers does nor occur only in humans. It has also been found in some

invertebrates, in many birds, aquatic mammals and of course primates.

When reinforcement learning is used to solve problems that are composed of many

different stages, learning is like a search process, in which the agent searches

the world for states that maximise reward and thus minimise punishment. The time

this search takes depends strongly upon the size and structure of the state

space and upon a priori knowledge encoded in the learning agent's initial

parameters. When a priori knowledge is not available, the search through the

state space is unbiased and can be excessively long. In nature, co-operative

mechanisms help to reduce the size of the search area, and hence the search

time, by providing the learner(s) with auxiliary sources of experience.

Furthermore, such mechanisms can allow agents to successfully learn problems

that would otherwise be too difficult. For these reasons we developed, tested

and showed that sharing experiences between learning robots does indeed lead to

faster and more robust learning.

Our learning algorithm uses a reinforcement technique based on sets of fuzzy

automata. It is similar to one step Q-learning, except that the learning

function operates directly on the "best" actions as opposed to the expected

reward. The task of each robot is to learn to associate the "best" motor action

for its current situation so that it moves around whilst avoiding obstacles. If

the motor speeds are limited to full speed forward, full speed backwards and

stop then with two motors there are nine different possible output actions. With

three channels of sonar each giving an 8-bit range value there are 224

input states. Since we wanted to run many trials, preferably before retirement,

the input space was mapped down to five input states that represent the

different circumstances the robot can find itself in:

It should be stated that this learning strategy does

not attempt to build a map of the environment, instead each robot learns how to

react to obstacles in the five different situations above. Each of these

situations, that is position of the nearest obstacle, has a separate fuzzy

automaton associated with it. Each automaton is a set of motor actions, and a

set of probabilities for taking the associated action. When presented with a

particular situation the action that a robot takes is defined by the set of

probabilities associated with that situation's automaton. At the start of the

learning algorithm these probabilities are all the same, thus for any particular

situation initially every action has an equal chance of being selected.

The beginning of each learning cycle starts with each robot examining its sonar

system to detect where the nearest obstacle is. This information is used to

establish which situation the robot is in and hence which automaton to use. A

weighted roulette wheel technique is then used to randomly select the most

appropriate action for this situation, given its current probabilities. The

action with the highest probability is the most likely to be chosen. This method

is similar to the techniques used in genetic algorithms. Initially since the

probabilities are equal the robots move around randomly. Over time "better"

actions will have their probabilities of being selected increased and

inappropriate actions will have their probabilities of being selected decreased.

Given enough time, the robot should select the "optimal" actions for each

situation.

The chosen action is executed for a short period of time. At the end of this

execution time, the sonar system is read again, so that the chosen action can be

evaluated. In order to evaluate the chosen action, a definition of which actions

are good and which actions are bad is required. These were chosen to be general,

so that they would not give the robot any information. Since the overall aim of

the robots are to move forward whilst moving away from close objects the

following three rules are used:

If the action was successful, its probability of being

selected is increased. If the action was unsuccessful, its probability of being

selected is decreased. In both cases, the other probabilities of the chosen

automaton are adjusted in order to keep the total of the probabilities constant.

The whole learning cycle is now repeated, but this time the sonar data that was

used to evaluate the robot's last action is used to decide which situation it is

now in.

For full two and four way learning, each robot has to transmit the position of

the object to which it reacted (i.e. case 1 to 5), the action that it chose

(i.e. 0 to 8) and how good or bad that action was (i.e. the Alpha factor). To

allow for the low rate of data transmission and the scanning of three other

robots, six sets of this data are compressed into one packet of data along with

a start of packet or synchronisation code, an incremental packet ID and a two

byte check sum. The ID code allows for the detection of missed packets since if

the ID of the current packet is not one greater than the last packet then one or

more packets of data have been missed. On average this loss of packets is less

than 2% for two way learning and 5% for four way learning. The main adaptive

loop of the program is:

Comparisons of the average learning rates for single robot, dual robot and four

way shared experience learning (the units of time are approximately seconds)

For further information on the robots hardware, flocking

both with and without leadership, and shared experience learning and its results

see my publications.

Back to my home page.